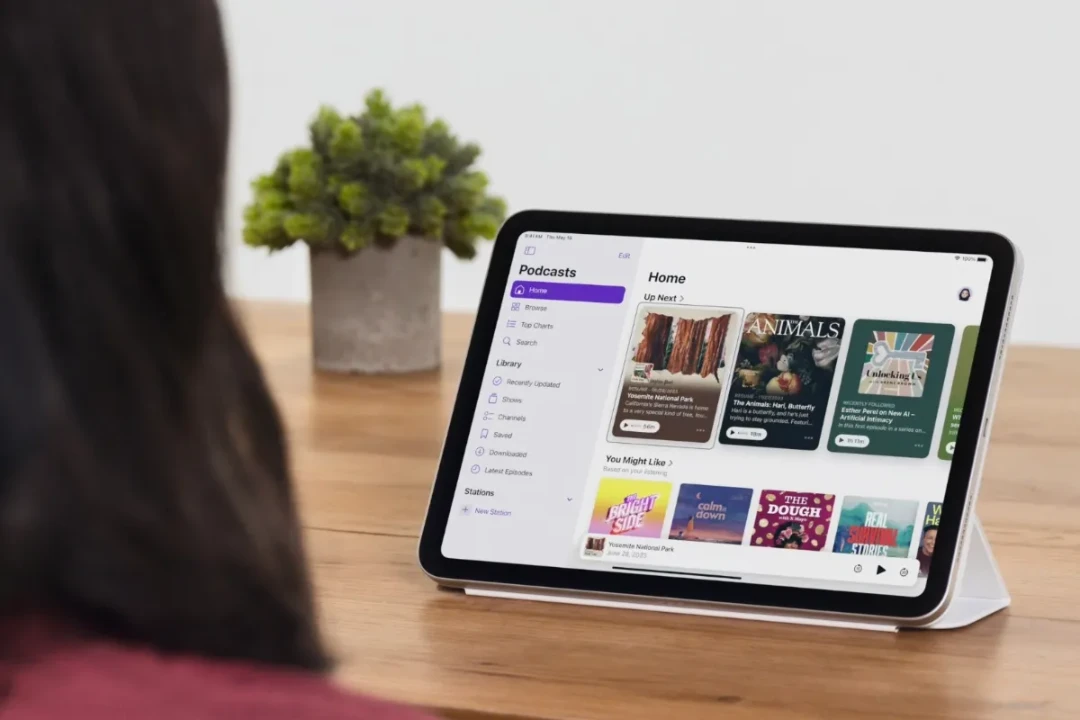

Apple has announced new accessibility features, including eye tracking, that are set to debut in iOS 18 and iPadOS 18 later this year. Eye Tracking, which is powered by artificial intelligence, lets people with physical disabilities control an iPhone or iPad using only their eyes.

Apple Eye-Tracking: Revolutionizing Accessibility in iOS 18 and iPadOS 18

Eye Tracking uses the device’s front-facing camera for quick setup and calibration. Because it relies on on-device machine learning, the data used to set up and control the feature stays on the device. No additional hardware is required: users move through the elements of an app by looking at them, and Dwell Control activates an element when their gaze rests on it.

Music Haptics: Apple’s Taptic Engine for Enhanced Accessibility

Apple is also introducing Music Haptics for users who are deaf or hard of hearing. With the feature turned on, the iPhone’s Taptic Engine translates music into tactile sensations played in time with the audio. Music Haptics works across millions of songs in Apple Music and will be available as an API so developers can add it to their own music apps.

Vocal Shortcuts and Siri: Expanding Custom Voice Commands in iOS 18

Vocal Shortcuts let users create personalized voice commands that Siri recognizes, which can launch shortcuts and carry out complex tasks on an iPhone or iPad. A separate feature, Listen for Atypical Speech, improves speech recognition for people whose speech is affected by conditions such as cerebral palsy, ALS, or stroke.

Together, these features reflect Apple’s commitment to inclusivity and accessibility, giving more people ways to control their devices with their eyes, their voice, or their sense of touch.